For the past month, I’ve been working with the organization Human Rights First on their campaign against police brutality. Human Rights First is an independent organization that challenges us all to live up to what it means to be American. They’re an amazing organization with almost 40 years of history fighting to protect basic human rights. They run several campaigns, and the one I’ve been a part of is the Police Brutality watch. With the invention of police body cameras and the widespread availability of mobile recording devices, we have seen an unprecedented amount of footage emerge of police using force on civilians. It’s obvious that the police must be trained to deal with dangerous individuals, but Human Rights First has set out to solve a different problem.

How often is the police use of force actually happening?

Through an interactive, live-updating feed in the form of a map of the United States, we’re working to expose all cases of violence between police and civilians. The more exposure we can produce, the more aware the public can be about this issue. We’ve been working on a tool useful for researchers, experts, activists, and anyone who needs or desires to educate themselves on the true history of our nation.

If we depend only on mainstream media, we’re bound to fall victim to the filtering and selection of only the hardest-hitting news stories. By developing a platform independent of outside influence, we can use cutting-edge technology to build a tool that is unbiased.

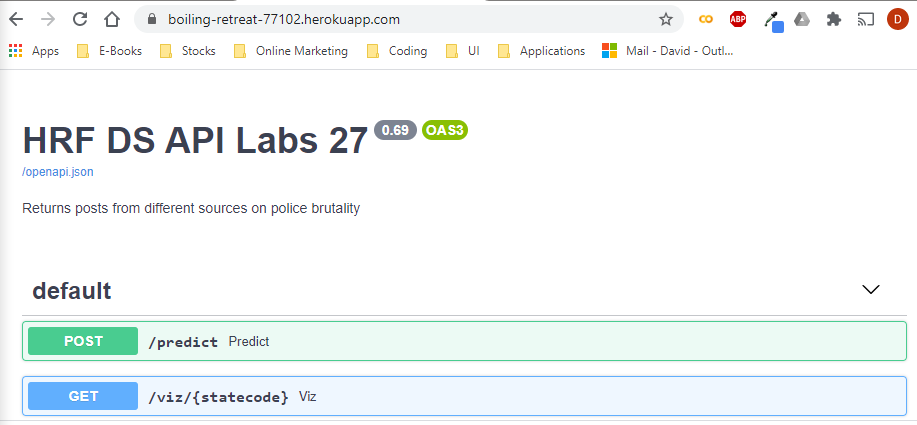

I’m working on the development of the data science API, which pulls raw news stories from various sources and uses a classification model to filter them down to cases of police brutality. It’s a classification problem because each story has to be labeled as either police brutality or no police brutality. This has been an interesting and deeply impactful project. Every couple of days, while working through potential sources and stories, I’d come across a new video or story of violence. It’s very difficult to move forward united in peace when the violence and hatred are so abundantly clear.

Obviously, as a data scientist, my job is not to be biased or insert my opinions. We developed an unbiased machine learning model through NLP operations. We then parse raw stories into readable text. Each parsed story is passed through our model so that a prediction is made, and if the model decides that the story is in fact one of police brutality, it gets marked as such and is passed on to the web team for visual display.

Technical Takeaways

Over the past 4 weeks I’ve worked on deploying two different APIs, scraping data with a third-party API, and building out a classification model to serve as a framework for future teams to learn from. Some of the most important features of this project were fairly complex and required close collaboration with my teammates in order to succeed.

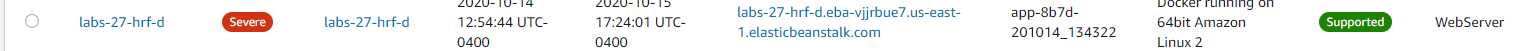

Deploying the application was a major challenge we faced in the tail end of the project. We had originally deployed on Amazon’s Elastic Beanstalk, but exactly two weeks before the deadline, the backend team notified us that the endpoints had stopped responding. The app had been deleted from our list of environments in the AWS Console, and it’s still a mystery how it was destroyed. I’m confident that it was deleted in error by another person working in the same console space, but neither I nor anyone I work with is in the business of pointing fingers or accepting failure. We began by deploying again to AWS EB, only to be confronted with a severe health warning.

After troubleshooting this severe health warning, I reached out to my manager for guidance on what to do. Fixing this was already taking up too much time, and I had to spend some attention on moving other pieces of the project forward. He pointed me in the right direction. After collaborating and planning, we decided to move the project forward and find an alternative path to success. That alternative was a deployment to Heroku.

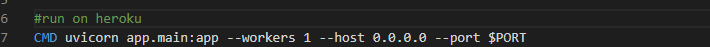

By combining the Uvicorn server with Docker containers, we successfully built a Docker image of the data science API and deployed it. Uvicorn is an excellent server implementation. It uses ASGI, the asynchronous successor to WSGI. Interestingly, AWS EB uses WSGI. There’s nothing wrong with WSGI, but ASGI is designed to be a superset of WSGI, which allows us to run WSGI applications within an ASGI server if needed.

Here are the steps for deploying a Heroku App using Docker and Uvicorn.

Credit for this process goes to one of the most helpful instructors and mentors from Lambda School, Ryan Herr.

Deploying to Heroku

Navigate to your app’s home directory, where your Dockerfile is held.

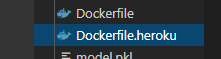

Make a copy of your Dockerfile for Heroku, and give it the extension “.heroku” so that it’s easy to identify.

Add a command at the end of this new Dockerfile so that Uvicorn listens on the port the Heroku hosting platform assigns.
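As a rough sketch, the two steps above could be wrapped in a small shell helper. The function name `make_heroku_dockerfile` and the module path `app.main:app` are placeholders for illustration, so substitute wherever your own app object lives. The one firm requirement is that the server binds to `$PORT`, which Heroku sets at runtime.

```shell
# Create Dockerfile.heroku next to an existing Dockerfile and append a start command.
# Usage: make_heroku_dockerfile <directory> <module:app>
make_heroku_dockerfile() {
  dir="$1"        # directory that holds the Dockerfile
  app_path="$2"   # hypothetical example: app.main:app

  # Keep the original Dockerfile untouched and work on a copy.
  cp "$dir/Dockerfile" "$dir/Dockerfile.heroku"

  # Shell-form CMD so that $PORT is expanded when the container starts.
  # The leading newline guards against a Dockerfile with no trailing newline.
  printf '\nCMD uvicorn %s --host 0.0.0.0 --port $PORT\n' "$app_path" >> "$dir/Dockerfile.heroku"
}
```

For example, `make_heroku_dockerfile ./project app.main:app` would produce `./project/Dockerfile.heroku`. If the original Dockerfile already ends with its own CMD, Docker only honors the last one, so the appended line is the one that takes effect.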

After this file is set up, simply run the create command:

heroku create

You should see output confirming that the app has been created.

Make sure you’re logged in to Docker, and then run the following, replacing infinite-garden-54629 with the name of your own app:

heroku container:login

docker build -f ./project/Dockerfile.heroku -t registry.heroku.com/infinite-garden-54629/web ./project

docker push registry.heroku.com/infinite-garden-54629/web:latest

heroku container:release web
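If you expect to redeploy often, the four commands above can be collected into one helper so the app name only appears once. This is a minimal sketch, not the process we actually scripted: the function name `deploy_to_heroku` is made up for illustration, and it assumes the `heroku` and `docker` CLIs are installed and that `Dockerfile.heroku` sits in the build context directory.

```shell
# Build, push, and release a Docker image to Heroku in one go.
# Usage: deploy_to_heroku <heroku-app-name> <build-context-dir>
deploy_to_heroku() {
  app="$1"       # the name printed by `heroku create`
  context="$2"   # directory containing Dockerfile.heroku
  image="registry.heroku.com/$app/web"

  # Chain with && so a failed step stops the deploy instead of releasing a stale image.
  heroku container:login &&
  docker build -f "$context/Dockerfile.heroku" -t "$image" "$context" &&
  docker push "$image:latest" &&
  heroku container:release web --app "$app"
}
```

With the app from this walkthrough, the call would be `deploy_to_heroku infinite-garden-54629 ./project`.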

Go to https://dashboard.heroku.com/apps and select the app

Go to Settings then Reveal Config Vars

Add any environment variables your app needs in this section.
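The same config vars can also be set from the terminal with the Heroku CLI’s `config:set` command, which may be handier than clicking through the dashboard. Here is a small sketch; the helper name `set_config_vars` and the variable names in the usage example are hypothetical.

```shell
# Set one or more config vars on a Heroku app from the terminal.
# Usage: set_config_vars <heroku-app-name> KEY=value [KEY=value ...]
set_config_vars() {
  app="$1"
  shift   # everything left over is a KEY=value pair
  heroku config:set "$@" --app "$app"
}
```

For instance, `set_config_vars infinite-garden-54629 EXAMPLE_API_KEY=replace-me` would set a single variable, and running `heroku config --app infinite-garden-54629` afterwards lists what is currently set.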

After you’re all set up in the Heroku dashboard, jump back to your terminal and run these commands:

heroku open

heroku logs --tail

This will open your interactive API in the browser, and you’ll be able to simultaneously view your app’s logs in your terminal. Now’s the time to test all of your routes and make sure everything is functioning properly.
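Route testing can be as simple as checking status codes with `curl`. The helper below is only a sketch: `check_route` is a made-up name, and the URL in the usage example assumes your app serves interactive docs at `/docs`, which may not match your setup.

```shell
# Request a URL and report its HTTP status code.
# Succeeds (exit status 0) only when the route answers with 200.
check_route() {
  url="$1"
  # -s silences progress, -o discards the body, -w prints just the status code.
  status=$(curl -s -o /dev/null -w '%{http_code}' "$url")
  echo "$status $url"
  [ "$status" = "200" ]
}
```

A call such as `check_route "https://infinite-garden-54629.herokuapp.com/docs"` prints the status next to the URL, so you can run it once per route while watching the logs in another terminal.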

Conclusions and Features

Some of the things I implemented and worked on for this project:

- A fully functioning API with live endpoint connectivity

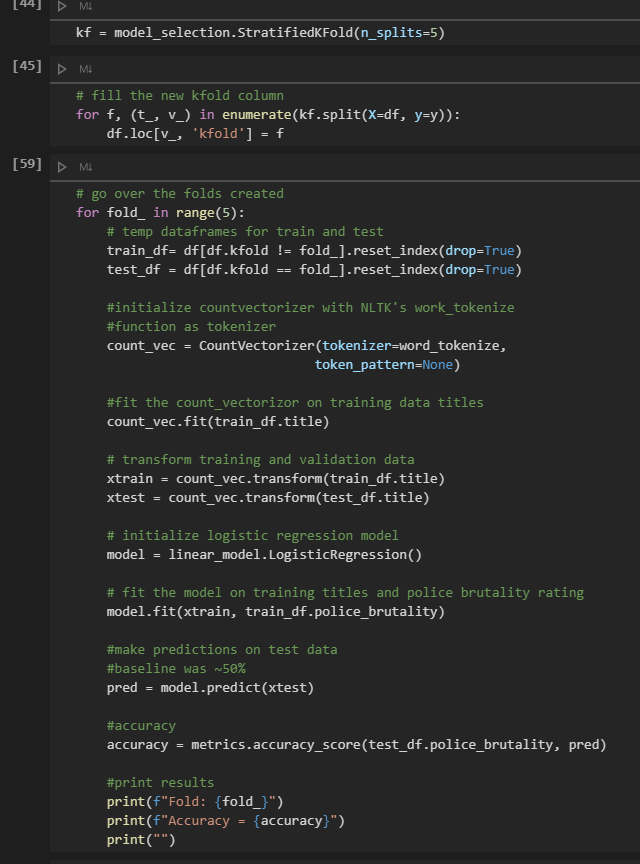

- A reconstructed classification model using a different approach to NLP

- Statistical data cleaning and organization

- A script for pulling Reddit news stories based on relevance to police brutality

The future is bright for Human Rights First. The future teams that inherit my team’s work have many opportunities to expand and improve upon it. Potential features could include incorporating another API, as we did with Reddit. They could also train a different sort of model to see whether the results come out better or worse. Feature importance and principal component analysis are very important for this, and I’m confident that future teams are being provided the proper tools to tackle those optimization opportunities.

There may be challenges in deploying the API. Seeing as AWS EB gave us issues and ended up crashing halfway through, I’m hopeful that the next group doesn’t run into an issue like this and is able to see the deployment all the way through without having to switch app hosting platforms.

I’ve learned super valuable lessons in teamwork, clarity, and patience. My peers did an excellent job of reminding me what it means to be human, and we’ve all treated each other with great respect and courtesy. I loved working with this team. It always felt professional and earnest in everything we did. Through all the bumps in the road, we remained calm and collected, organized plans, and attacked problems together as one unit. I’m very excited to carry these traits of teamwork and coordination with me to my next position.